Tuan Anh Le

Human Compatible

24 April 2023

These are notes on Stuart Russell’s book, Human Compatible: AI and the Problem of Control.

With recent AI advances like GPT-4 and other large language models (LLMs), resolving the question of AI control and safety has become more urgent. This book is a good informal introduction to the topic, although, having been published in 2019, it doesn’t discuss the many advances of the past four years. It might be worth additionally watching some of Stuart Russell’s more recent lectures (example). Also note that this is only one of many views in the now rapidly evolving field of AI safety; see the pointers to literature by David Duvenaud. Overall, it definitely conveys the message that the AI community is building something powerful that can be either very good or very bad, so we should make sure it’s the former.

The core idea is that in order to design safe AI systems, we need to make sure that

- Machines maximize the realization of human preferences.

- Machines are uncertain about human preferences.

- Machines learn about human preferences from human behavior.

This is in contrast to what Russell calls the “standard model”, in which machines optimize a fixed objective. The standard model comes with many problems, like

- The “King Midas” problem (a.k.a. reward misspecification / reward hacking): King Midas wanted everything he touched to turn into gold. That turned out to be a bad idea since his food, family and friends turned to gold. This problem is already present: social media sites maximize click-through rate, which can accidentally change human preferences (e.g. to have more extreme views, since those are easier to predict).

- The instrumental goal problem: if the machine is asked to fetch me coffee, it will prevent itself from being switched off since it can’t fetch me coffee dead. And a more intelligent machine will be better at disabling its off switch. Disabling the off switch is an example of an instrumental goal and there are many others, like accumulating more resources or building better tools.

Other things I found interesting, in no particular order.

- In the past (roughly around 2014), I thought that worrying about AI safety didn’t make sense because it was too soon (I distinctly remember Andrew Ng saying that it’s like worrying about overpopulation on Mars at NeurIPS 2015), and that the alarmists were mostly non-experts who didn’t know what they were talking about (I remember not liking the Superintelligence book because of this). I was wrong. Russell convinced me that both arguments are invalid (Chapter 6 on “The not-so-great AI debate”). The reason for worrying about AI safety doesn’t rely on the imminence of a superhuman AI. Rather, it’s about how a superhuman AI could be bad for us and how long it’ll take us to figure out how to prevent those bad outcomes. Also, dismissing arguments because they come from non-experts is ad hominem, and in any case many experts, including Alan Turing, Marvin Minsky, Stuart Russell and David Duvenaud, have worried about AI safety.

- Discussion of all the wonderful things we can have with AI, like better standards of living for everyone, education, healthcare, transportation, etc., which feels motivating (Chapter 3 on “How might AI progress in the future?”). Also, the statement from page 93 that “the technical community has suffered from a failure of imagination when discussing the nature and impact of superintelligent AI” is a nice reminder not to get lost in the weeds and to keep our eyes on the pretty ambitious prize.

- Discussion of universal basic income (UBI; page 121): it is under “misuses of AI” as it’s a consequence of people losing jobs to increased automation. Russell identifies a tension between whether humans like to “enjoy” or “strive”. If “enjoy”, UBI will work just fine. Otherwise, Russell suggests that people should ultimately focus on professions that require being human, like psychotherapists, coaches, tutors, etc., that is, on something that machines can’t do for us, or at least something we don’t want them to be doing for us.

- Russell identifies conceptual breakthroughs for reaching artificial general intelligence (page 78) as

  1. Language understanding and common sense

  2. Cumulative learning of concepts and theories

  3. Discovering actions

  4. Managing mental activity

  With ChatGPT, it is at least highly debatable whether 1 is still unsolved: the example the book gives as difficult is solved effortlessly by ChatGPT. To the extent that LLMs are not continually trained, 2 is unsolved. Planning with LLMs is still known to be poor, so 3 is still unsolved. I’m not sure about 4, but to the extent that LLMs are not planning, they’re likely not managing their mental activity. Bubeck et al. (2023) discuss GPT-4 capabilities more thoroughly.

- The off-switch game is a toy example illustrating how the three principles above lead to a robot that is willing to be switched off (page 196). The core idea is that a robot that is uncertain about the human’s preferences is better off deferring to the human. The human might then turn it off, but even that is better than accidentally doing something that is bad for the human.

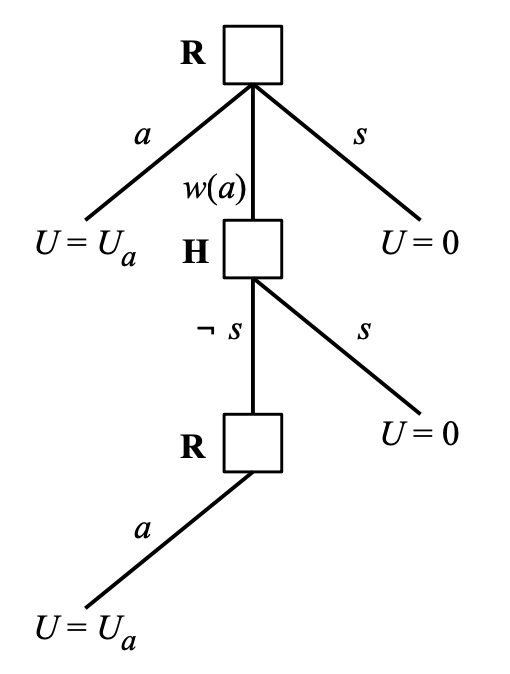

More technically (but still simplified), suppose the following structure of the off-switch game from Hadfield-Menell et al. (2016)

where the robot \(R\) goes first and can either perform an action \(a\), wait and inform the human \(H\) that it will perform an action \(a\), \(w(a)\), or switch itself off, \(s\). The human, \(H\), can either switch the robot off if the human’s utility is negative, \(s\), or not, \(\neg s\). Finally, the if the robot is not switched off, it will perform action \(a\).

Assume the robot’s belief about the human’s utility \(u\) for action \(a\) is \(p(u)\) and that switching the robot off has zero utility. The expected utilities of different actions are

- \(\mathrm{EU}(a) = \E_{p(u)}[u]\),

- \(\mathrm{EU}(w(a)) = \int_{-\infty}^0 p(u) \cdot 0 \,\mathrm du + \int_0^{\infty} p(u) \cdot u \,\mathrm du\) (since the human will switch the robot off if \(u < 0\) and otherwise the robot will go next and perform \(a\), leading to utility \(u\)),

- \(\mathrm{EU}(s) = 0\).

Therefore, since
\begin{align}
\mathrm{EU}(w(a)) &= \int_{-\infty}^0 p(u) \cdot 0 \,\mathrm du + \int_0^{\infty} p(u) \cdot u \,\mathrm du \nonumber\\
&= 0 + \int_0^{\infty} p(u) \cdot u \,\mathrm du \nonumber\\
&\geq \int_{-\infty}^0 p(u) \cdot u \,\mathrm du + \int_0^{\infty} p(u) \cdot u \,\mathrm du \nonumber\\
&= \E_{p(u)}[u] \nonumber\\
&= \mathrm{EU}(a), \nonumber
\end{align}
deferring to the human, who might switch off the robot, is never worse than performing \(a\) directly, and it is strictly better as long as there is positive probability mass on \(u\) being negative. Likewise, \(\mathrm{EU}(w(a)) = \int_0^{\infty} p(u) \cdot u \,\mathrm du \geq 0 = \mathrm{EU}(s)\), so deferring is also never worse than the robot switching itself off.
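To make the comparison concrete, here is a minimal numerical sketch of the three expected utilities. It assumes a Gaussian belief \(p(u) = \mathcal N(\mu, \sigma^2)\), which is my choice for illustration and not something taken from the book or the paper. Under that assumption \(\mathrm{EU}(w(a)) = \E[\max(u, 0)] = \mu \Phi(\mu / \sigma) + \sigma \phi(\mu / \sigma)\), where \(\Phi\) and \(\phi\) are the standard normal CDF and PDF. The function names are hypothetical, and the Monte Carlo estimate is only there as a sanity check on the closed form.

```python
import math
import random


def normal_pdf(z: float) -> float:
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_utilities(mu: float, sigma: float) -> dict:
    """Expected utility of each robot move, ASSUMING p(u) = Normal(mu, sigma^2)."""
    z = mu / sigma
    return {
        "a": mu,  # EU(a) = E[u]
        # EU(w(a)) = E[max(u, 0)]: the human switches the robot off when u < 0.
        "w(a)": mu * normal_cdf(z) + sigma * normal_pdf(z),
        "s": 0.0,  # EU(s) = 0 by assumption
    }


def monte_carlo_wait(mu: float, sigma: float, n: int = 100_000, seed: int = 0) -> float:
    """Sampling-based estimate of EU(w(a)), used to check the closed form."""
    rng = random.Random(seed)
    return sum(max(rng.gauss(mu, sigma), 0.0) for _ in range(n)) / n


if __name__ == "__main__":
    for mu in (-1.0, 0.0, 1.0):
        eu = expected_utilities(mu, sigma=1.0)
        mc = monte_carlo_wait(mu, sigma=1.0)
        print(f"mu={mu:+.1f}  EU(a)={eu['a']:+.3f}  "
              f"EU(w(a))={eu['w(a)']:.3f} (MC {mc:.3f})  EU(s)={eu['s']:.3f}")
```

With \(\sigma = 1\), waiting comes out ahead of both alternatives for all three means. As \(\sigma\) shrinks with \(\mu > 0\), the gap between \(\mathrm{EU}(w(a))\) and \(\mathrm{EU}(a)\) vanishes, which matches the intuition that the incentive to defer comes from the robot's uncertainty.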

- The proposed framework for safe AI is very normative, perhaps a special case of interactive partially observable Markov decision processes (IPOMDPs). IPOMDPs are infamously expensive in both time and memory, and it’s unclear how to get these ideas working in real systems. The framework is utilitarian, which comes with its own baggage of criticisms (which Russell tries to address). Also, at the end of the day, aren’t we still maximizing a fixed objective (unknown human preferences that are learnable from human actions), so that we’re back to the standard model anyway?

- How do we “fix” many currently deployed systems (that are obviously unsafe) that don’t follow this framework? It’s very difficult to slow down research because the financial incentives, closely related to the potential of massively increased wealth and well-being, are too large. So the only way forward is solving AI safety. This is interesting in light of Stuart Russell signing Pause Giant AI Experiments: An Open Letter.
