Tuan Anh Le

AI existential risk

05 June 2023

Disclaimer: I’m very much still trying to understand this issue and was hoping that by writing it down, I can clarify my thinking and learn from others. I’m drawing mainly from Shah et al. (2022), Stuart Russell’s Human Compatible and Ngo et al. (2023).

ChatGPT and similar artificial intelligence (AI) systems based on large language models (LLMs) are impressive but potentially dangerous. There are many ways LLMs can be dangerous. The most immediate is perhaps the social, economic and political impact. Jobs might become redundant, there will be more fake news, etc. Here, I will focus on the existential risk of building stronger AI systems by scaling LLMs up. Can building sych systems lead to human extinction or some other unrecoverable global catastrophe? The basic argument is that AI’s goals may become misaligned, and a smarter AI may develop better ways to reach those goals.

The underlying principle behind LLMs’ impressive performance are scaling laws—that the performance increases with model and dataset size. With increased performance, there are surprising emergent behaviors and it’s not clear what further emergent behaviors may arise if we keep scaling. Our lack of understanding of when and how these emergent behaviors arise is the main reason why existential risk is perceived to be larger than before.

AI’s goals may become misaligned

There are many ways AI’s goals can become misaligned. Malicious actors might simply choose to train AI to follow misaligned goals. ChaosGPT is an early example. This alone is concerning since there isn’t a simple way to prevent this. But let’s say we can.

Another common way to get an AI with a misaligned goal is (accidental) goal misspecification. This is known as a King Midas problem (or reward misspecification, or outer misalignment). King Midas wanted everything to turn into gold, inadvertently turning his family and food into gold. In a famous video game example, a racing boat manages to get more points by finding a glitch that lets it accumulate more points than it would by completing the race the intended way. In LLMs, fine-tuning a model to be helpful can make it more harmful.

Finally, even if we specify the goal in a way that is aligned with humanity’s goals, the system can fail to learn the goal – we get goal misgeneralization (or inner misalignment). This happens because of the way we currently train LLMs. We use neural networks to model human preferences from a finite dataset of labels and as a result we don’t have control over what it does out of distribution. This is different from goal misspecification in that the goal is correct in training; just that during test time it encounters situations the preference model hasn’t seen and fails to generalize. This class of failure can be dangerous as the model can learn capabilities well, but can use those capabilities to follow a bad goal, like a smart criminal.

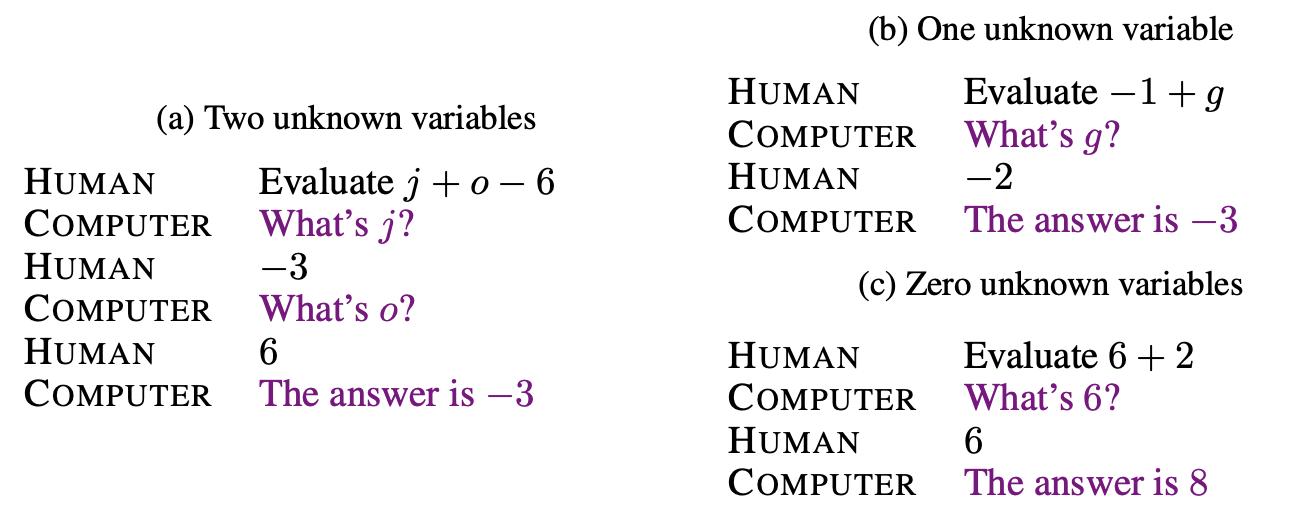

Shah et al. (2022) give great examples of goal misgeneralization. One example is about an agent learning to follow items on a map in a particular order. Another “teacher” agent is introduced that goes through the items in the desired order. The “student” agent seems to learn to follow items on the map, however during test time, it keeps following the teacher despite the teacher going in an incorrect order and the student incurring bad reward. In an LLM-based example, we train the model on evaluating linear expressions by asking users for values of unknown variables. Even when presented with a linear expression which can be evaluated directly, the model keeps asking for variable values.

Redundant questions about linear expressions are not an existential risk but it’s not difficult to imagine worse hypothetical scenarios, see section 4 in Shah et al. (2022).

Smarter AI may develop better ways to reach misaligned goals

But can’t we just turn the AI off? Maybe not, due to the can’t-fetch-coffee-if-I’m-dead problem (from Stuart Russell’s Human Compatible). If a smart agent is asked to fetch coffee, it can realize that it won’t be able to fetch coffee if it’s turned off and will prevent humans from doing so.

There are smart criminal humans everywhere and the world still goes on? While a smart criminal will eventually be caught, it’s unclear we can catch a much smarter AI, due to its potential ability to come up with better ways to achieve goals, potentially including deception.

Avoiding being turned off and deception are instances of instrumental goals. Instrumental convergence hypothesizes that a smart agent will tend to pursue instrumental goals which might make it harder for us to monitor for, detect and prevent bad outcomes. Hypothetical examples include resource accumulation, situational awareness, deception, goal protection. As of today, we don’t have conclusive evidence that such abilities have emerged from current LLMs but we don’t know what can happen as these systems get more powerful.

[back]